Gradle Config Cache Reuse on CI

History, optimization iterations, and instructions on how to get Gradle Config Cache reuse on CI.

When I gave my Droidcon London talk about Android CI in 2023, I said I would follow up with a blog post explaining specifically how to accomplish Configuration Cache (CC) reuse on CI and what it gets you. I've been working on it ever since, and one of the hurdles has been how hard it is to make a generic setup, but better late than never, right?

What is Gradle Configuration Cache?

Gradle Configuration Cache (CC) is a feature that saves the result of the configuration phase across builds. The configuration phase is where Gradle evaluates your build scripts, resolves dependencies, and creates the task graph - often a significant portion of total build time, especially for large projects. By caching this phase, subsequent builds can skip directly to execution, significantly reducing build times.

First Implementation

While I was at Hinge, I put this together on CircleCI during Droidcon NYC 2023 and presented it at Droidcon London a couple of months later. The approach involved:

- Identifying the essential directories in the Gradle User Home that contained the configuration cache data.

- Creating a cache key that incorporated the project state and Gradle version.

- Configuring CircleCI to save and restore this cache before and after builds.

- Optimizing it by removing some directories to fit how CircleCI's cache works.
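A minimal Python sketch of the first two steps follows. It assumes project-relative `.gradle` directories and a caller-supplied list of build-file glob patterns that stand in for "project state". The directory templates, the `<gradle-version>` placeholder expansion, and the `gradle-cc-` key prefix are all illustrative assumptions. They are not CircleCI or Gradle APIs.

```python
import hashlib
from pathlib import Path

# Hypothetical subset of the directories worth caching; "<gradle-version>" is a placeholder.
CACHE_DIR_TEMPLATES = [
    ".gradle/<gradle-version>",
    ".gradle/configuration-cache",
    "~/.gradle/caches/<gradle-version>/kotlin-dsl",
    "~/.gradle/caches/<gradle-version>/transforms",
    "~/.gradle/caches/modules-2",
]


def cache_dirs(project_dir: Path, gradle_version: str, templates: list[str]) -> list[Path]:
    """Expand the version placeholder and keep only directories that actually exist."""
    found = []
    for template in templates:
        path = Path(template.replace("<gradle-version>", gradle_version)).expanduser()
        if not path.is_absolute():
            path = project_dir / path  # project-relative entries live under the repo root
        if path.is_dir():
            found.append(path)
    return found


def hash_build_files(project_dir: Path, patterns: list[str]) -> str:
    """SHA-256 over each matching file's relative path and bytes, in a stable order."""
    digest = hashlib.sha256()
    files = sorted({p for pattern in patterns for p in project_dir.glob(pattern) if p.is_file()})
    for path in files:
        digest.update(path.relative_to(project_dir).as_posix().encode())
        digest.update(b"\0")
        digest.update(path.read_bytes())
        digest.update(b"\0")
    return digest.hexdigest()


def cache_key(project_dir: Path, gradle_version: str, patterns: list[str]) -> str:
    """Gradle version first, so entries from different Gradle versions never collide."""
    return f"gradle-cc-{gradle_version}-{hash_build_files(project_dir, patterns)}"
```

In a real job the patterns would also need to cover settings files, version catalogs, and convention plugin sources. Anything left out is a way for the key to match while Gradle still rejects the entry.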

Even in this earliest implementation I noticed two very important things:

- Implementing input hashing as close as possible to how Gradle CC does it allows me to predict with high accuracy whether a cache entry will result in CC reuse.

- Partial matches still resulted in some benefit as long as convention plugin sources hadn't changed - the dependency cache and some amount of transforms sped up the execution phase of the build.
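Partial matches fall out naturally if the key is built from segments and restores fall back to prefixes, similar to how `restore-keys` works in GitHub's `actions/cache`. The sketch below assumes a hypothetical key layout of `gradle-cc-<gradle-version>-<convention-plugin-hash>-<full-input-hash>`. Under that layout, a prefix match means the Gradle version and convention plugins are unchanged.

```python
from typing import Optional


def find_entry(available: list[str], exact_key: str, fallback_prefixes: list[str]) -> tuple[str, Optional[str]]:
    """Classify a lookup as ("exact" | "partial" | "miss", matched key).

    `available` is assumed to be ordered oldest to newest, so the last prefix
    match is the most recently written entry.
    """
    if exact_key in available:
        return "exact", exact_key
    for prefix in fallback_prefixes:
        candidates = [key for key in available if key.startswith(prefix)]
        if candidates:
            return "partial", candidates[-1]
    return "miss", None
```

A partial result still restores the dependency cache and transforms, which is where the execution-phase benefit comes from. Only an exact result is expected to produce Configuration Cache reuse.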

From CC-Keystore to Environment Variable

One of the key early challenges with Gradle CC is that it captures and serializes the entire build state, including environment variables that might contain sensitive information like API keys. That is a security risk when reusing the configuration cache across builds, because you're essentially carrying those secrets around. This became a real issue on GitHub Actions, where the official Gradle Build Action reused the configuration cache. That opened up a vulnerability: anyone who could create PRs that ran actions could read or expose secrets they would otherwise not be privy to.

The Gradle team quickly addressed this by implementing an encrypted keystore file within the Gradle User Home directory, separate from the other traditionally cached directories, and by excluding it from caching by default. This encryption layer mitigates the risk of exposing sensitive information... but we all still wanted a straightforward way to reuse the cache on CI.

GitHub Actions Implementation

I adapted my setup for GitHub Actions. This version handled the encryption side of the security concerns through an environment variable. I planned it with Gradle engineers, and it makes it easy for anyone to provide their own encryption key.

1. Cache Configuration: Use the GitHub Actions cache API to store the configuration cache directories

2. Encryption Support: Provide the encryption key using GitHub secrets

3. Versioning: Version the cache based on Gradle version and project state

4. Security: Ensure PR-triggered workflows don't expose secrets

This approach ensures that:

1. The configuration cache is properly encrypted using the provided key.

2. The cache key is unique to the project state and Gradle version.

3. Only the essential directories are cached for optimal performance.
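The sketch below covers the encryption and PR-safety pieces. It assumes Gradle's `GRADLE_ENCRYPTION_KEY` environment variable, which is available in recent Gradle versions. It also assumes a Base64-encoded 16-byte AES key, which is what `openssl rand -base64 16` produces. The `may_write_cache` policy and its repository-name inputs are hypothetical. In a GitHub Actions workflow they would come from the event payload.

```python
import base64
import secrets

KEY_BYTES = 16  # assumption: an AES-128-sized key, matching `openssl rand -base64 16`


def generate_encryption_key() -> str:
    """Return a Base64-encoded random key to store as a CI secret."""
    return base64.b64encode(secrets.token_bytes(KEY_BYTES)).decode("ascii")


def is_valid_encryption_key(value: str) -> bool:
    """Reject values that are not strict Base64 or decode to the wrong length."""
    try:
        raw = base64.b64decode(value, validate=True)
    except ValueError:  # binascii.Error is a subclass of ValueError
        return False
    return len(raw) == KEY_BYTES


def may_write_cache(event_name: str, head_repo: str, base_repo: str) -> bool:
    """Hypothetical policy: only trusted builds write cache entries.

    Pushes and PRs from branches in the same repository may write;
    PRs from forks, or any other event, may only read.
    """
    if event_name == "push":
        return True
    if event_name == "pull_request":
        return head_repo == base_repo
    return False
```

The generated value would go into a repository secret. The CI job then exports it as `GRADLE_ENCRYPTION_KEY`, so every machine encrypts and decrypts entries with the same key.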

By late 2024 I was pretty adept at implementing this type of setup. So when I started a new job where I needed to apply everything I'd learned to lower CI pipeline wall time, this was one of the first things I proved out and deployed.

Network Speed

I noticed that GitHub Actions allowed transfer speeds of 150-300 MB/s for cache restoration. That is about what you can get from an AWS S3 bucket download, so short of pre-downloading these cache entries to disk before a CI job starts, you're not going to get much faster. You could look into things like a CDN, but I am skeptical that the cost is worth it.
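For a rough sense of scale, download time is just archive size divided by throughput. The sketch below uses the 150-300 MB/s range above and a hypothetical 1,500 MB archive. The archive size is made up for illustration and is not a measured value.

```python
def download_window(archive_mb: float, low_mb_s: float, high_mb_s: float) -> tuple[float, float]:
    """Best- and worst-case download time in seconds for a throughput range."""
    if archive_mb < 0 or low_mb_s <= 0 or high_mb_s < low_mb_s:
        raise ValueError("need a non-negative size and 0 < low <= high")
    return archive_mb / high_mb_s, archive_mb / low_mb_s


best, worst = download_window(1500, 150, 300)  # hypothetical 1,500 MB archive
print(f"{best:.0f}-{worst:.0f} s")  # 5-10 s
```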

Compression Algorithms

The compression algorithm affects several aspects of configuration cache performance:

1. Storage Size: Better compression means less disk space used and less data transferred over the network

2. Cache Write Time: Faster compression reduces the time to save the cache

3. Cache Read Time: Faster decompression reduces build startup time

4. Network Transfer: Higher compression ratios reduce the time to upload/download caches on CI
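The four factors above interact: a better ratio shrinks the download, while slower decompression adds time to every restore. The model below assumes download and decompression run back to back. A streaming `tar` pipeline overlaps them, so treat the result as pessimistic. The two codecs and their numbers are hypothetical, not measured values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Codec:
    name: str
    ratio: float            # compressed size / original size (1.0 means no savings)
    decompress_mb_s: float  # decompression throughput on the CI machine


def restore_seconds(original_mb: float, network_mb_s: float, codec: Codec) -> float:
    """Download the compressed archive, then decompress it (no overlap assumed)."""
    download = original_mb * codec.ratio / network_mb_s
    decompress = original_mb / codec.decompress_mb_s
    return download + decompress


fast = Codec("fast-codec", ratio=0.5, decompress_mb_s=1000)  # hypothetical numbers
dense = Codec("dense-codec", ratio=0.4, decompress_mb_s=200)  # hypothetical numbers
for codec in (fast, dense):
    print(codec.name, restore_seconds(2000, 200, codec))
```

With these made-up numbers, the denser codec loses (14 s vs 7 s) despite producing the smaller archive. That is why decompression speed matters as much as ratio on CI.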

I used the lzbench tool to compare the main compression algorithms I considered for the mostly binary Configuration Cache data. You can reproduce the experiment by forking this repo, or just read the log output:

Run mkdir -p results
1000+0 records in
1000+0 records out
1048576000 bytes (1.0 GB, 1000 MiB) copied, 3.42281 s, 306 MB/s
Running benchmarks on compression tools...
lz4fast compr iter=2 time=0.12s speed=18148.11 MB/s
lz4fast compr iter=4 time=0.23s speed=18451.05 MB/s
lz4fast compr iter=6 time=0.35s speed=17295.97 MB/s
lz4fast compr iter=8 time=0.48s speed=16480.74 MB/s
lz4fast compr iter=10 time=0.60s speed=17013.81 MB/s
lz4fast compr iter=12 time=0.73s speed=16916.74 MB/s
lz4fast compr iter=14 time=0.85s speed=16497.49 MB/s
lz4fast compr iter=16 time=0.97s speed=17099.23 MB/s
lz4fast decompr iter=1 time=0.24s speed=4380.21 MB/s
lz4fast decompr iter=2 time=0.57s speed=4348.17 MB/s
lz4fast decompr iter=3 time=0.90s speed=4445.86 MB/s
lz4fast decompr iter=4 time=1.23s speed=4390.31 MB/s
lz4fast decompr iter=5 time=1.56s speed=4404.67 MB/s
lz4fast decompr iter=6 time=1.89s speed=4394.06 MB/s
lz4fast decompr iter=7 time=2.22s speed=4441.99 MB/s
lz4fast decompr iter=8 time=2.55s speed=4383.07 MB/s
lz4fast decompr iter=9 time=2.88s speed=4287.02 MB/s
lz4fast decompr iter=10 time=3.22s speed=4276.68 MB/s
lz4fast decompr iter=11 time=3.56s speed=4397.09 MB/s
lz4fast decompr iter=12 time=3.89s speed=4326.29 MB/s
lz4fast decompr iter=13 time=4.22s speed=4413.33 MB/s
lz4fast decompr iter=14 time=4.55s speed=4419.21 MB/s
lz4fast decompr iter=15 time=4.89s speed=4308.41 MB/s
lz4fast decompr iter=16 time=5.22s speed=4428.18 MB/s
lz4fast decompr iter=17 time=5.54s speed=4432.40 MB/s
lz4fast decompr iter=18 time=5.87s speed=4343.01 MB/s
lzbench 2.0.2 | GCC 13.3.0 | 64-bit Linux | AMD EPYC 7763 64-Core Processor
Compressor name         Compress. Decompress. Compr. size  Ratio Filename
lz4 1.10.0 --fast -1   18656 MB/s  4489 MB/s   1052688064 100.39 testfile
lz4fast decompr iter=19 time=6.19s speed=4489.73 MB/s
done... (cIters=10 dIters=20 cTime=1.0 dTime=2.0 chunkSize=1706MB cSpeed=0MB)
zstd_fast compr iter=1 time=0.24s speed=4331.79 MB/s
zstd_fast compr iter=2 time=0.48s speed=4318.53 MB/s
zstd_fast compr iter=3 time=0.73s speed=4305.59 MB/s
zstd_fast compr iter=4 time=0.97s speed=4351.03 MB/s
zstd_fast compr iter=5 time=1.21s speed=4398.94 MB/s
zstd_fast compr iter=6 time=1.45s speed=4354.88 MB/s
zstd_fast compr iter=7 time=1.69s speed=4306.39 MB/s
zstd_fast compr iter=8 time=1.93s speed=4334.72 MB/s
zstd_fast compr iter=9 time=2.17s speed=4368.03 MB/s
zstd_fast decompr iter=2 time=0.17s speed=12294.18 MB/s
zstd_fast decompr iter=4 time=0.43s speed=12563.39 MB/s
zstd_fast decompr iter=6 time=0.68s speed=13375.19 MB/s
zstd_fast decompr iter=8 time=0.92s speed=13718.94 MB/s
zstd_fast decompr iter=10 time=1.17s speed=12785.95 MB/s
zstd_fast decompr iter=12 time=1.43s speed=13260.96 MB/s
zstd_fast decompr iter=14 time=1.68s speed=12864.40 MB/s
zstd_fast decompr iter=16 time=1.93s speed=13570.92 MB/s
lzbench 2.0.2 | GCC 13.3.0 | 64-bit Linux | AMD EPYC 7763 64-Core Processor

It turns out GitHub Actions already uses zstd for its built-in cache mechanism. That aligns well with my finding that zstd offers the best overall balance of compression and decompression speed for caching binary data in CI environments. Zac landed a zstd wrapper for Buildkite; take a look at it if this option isn't currently available on your build system.
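If your CI provider's cache step doesn't compress with zstd, you can archive the directories yourself. The sketch below only builds the command lines. It assumes GNU tar and the `zstd` CLI are on the PATH, where `-T0` tells zstd to use all available cores. The archive name is hypothetical.

```python
import os
import shlex


def archive_command(archive: str, paths: list[str], threads: int = 0) -> list[str]:
    """GNU tar piped through zstd; -T0 lets zstd pick the thread count from the core count."""
    expanded = [os.path.expanduser(p) for p in paths]
    return ["tar", f"--use-compress-program=zstd -T{threads}", "-cf", archive, *expanded]


def extract_command(archive: str, dest: str) -> list[str]:
    """GNU tar runs the compress program with -d when reading, so plain "zstd" is enough."""
    return ["tar", "--use-compress-program=zstd", "-xf", archive, "-C", dest]


cmd = archive_command("gradle-cc.tar.zst", ["./.gradle/configuration-cache"])
print(shlex.join(cmd))  # in a real job: subprocess.run(cmd, check=True)
```

Paths under `~` are expanded to absolute paths. By default GNU tar stores these without the leading `/`, so extracting with `-C /` puts them back where they came from.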

Dry Run for Smaller Transforms

Nicklas Ansman told me how he'd used --dry-run with the same Gradle tasks to create a much smaller ~/.gradle/caches/<version>/transforms directory. When I tried it out, it cut the size of the transforms directory in half or more. Each of these improvements made Gradle Config Cache more feasible and maintainable on CI.
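The sketch below shows the dry-run trick plus a way to measure its effect. It assumes the Gradle wrapper lives at `./gradlew`. `--dry-run` and `--configuration-cache` are real Gradle flags, while the helper names and the size check are illustrative. The second flag is redundant if `org.gradle.configuration-cache=true` is already set in `gradle.properties`.

```python
import os
from pathlib import Path


def gradle_argv(tasks: list[str], dry_run: bool, wrapper: str = "./gradlew") -> list[str]:
    """Same tasks for both runs, so the dry run configures the same build as the real one."""
    argv = [wrapper, "--configuration-cache"]
    if dry_run:
        argv.append("--dry-run")  # configure the build and list tasks, but don't execute them
    return argv + list(tasks)


def directory_size_bytes(root: Path) -> int:
    """Total size of regular files under root; symlinked directories are not followed."""
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = Path(dirpath) / name
            if path.is_file() and not path.is_symlink():
                total += path.stat().st_size
    return total
```

To see the effect on your own build, compare `directory_size_bytes` of the transforms directory after a dry run against the same directory after a normal build, each starting from a clean Gradle User Home.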

The Failures and Untested

- AWS S3 Express One Zone was about the same speed as standard AWS S3, but because it's limited to a single Availability Zone, we had more cache misses than hits.

- I have yet to profile the difference between AWS EBS volumes and SSDs. There is definitely a performance win to be had there, both for configuration cache and for overall Gradle performance, since Gradle generates and accesses hundreds of thousands of files.

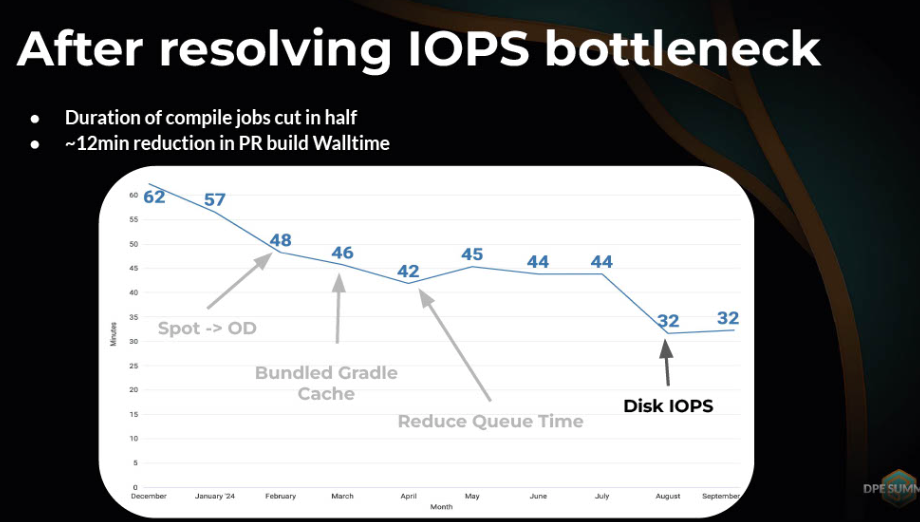

Maybe someone else can try this out. Update: Michael Yoon gave a talk on this last year at Gradle DPE. Thanks Josh! It's clearly worth pursuing.

- zstd dictionary training - since the build I worked on changes pretty often, I don't think it makes sense to try, but for a more stable codebase that doesn't change module build files all the time, it might make more sense.

Future Improvements I'm Watching

The Gradle team is actively working on improving Configuration Cache performance and usability as they have for the past several years. I'm watching several feature requests in the Gradle issue tracker to know when to pursue the next optimization:

1. Native Cross-machine Sharing #13510: Official support for sharing configuration cache between machines could eliminate the need for custom solutions.

2. Better Compression Algorithms #12809 & #30332: Gradle has considered using zstd for cache compression, which could improve both compression ratio and speed. This one interests me most because of the compression algorithm comparisons I've already done. I'm trying to find the time to contribute myself, but I've been distracted by MCP lately...

Config Cache Reuse Checklist

First, get Configuration Cache reuse working locally. This varies a lot from project to project, but Gradle has documentation, and its community Slack has advice, on how to get it working.

When you're ready for CI you'll add the following to your Gradle CI job execution steps:

- Hash all inputs for your Gradle tasks, including everything passed to invoke Gradle. That includes the JDK, JVM args, referenced environment variables, etc.

- Use the hash to query your storage solution of choice. I recommend AWS S3, and most CI providers make that easy or use it under the hood anyway.

- Depending on the availability of config cache entries:

  - When you cannot restore a config cache entry from your storage solution:

    - Perform a --dry-run of your Gradle tasks

    - Create archives from the directories listed below

    - Perform your Gradle tasks

    - Measure (and maybe assert) whether a configuration cache hit happened

    - Upload the config cache entry archives to your storage solution

  - When you get a config cache entry restored:

    - Perform your Gradle tasks. If it was only a partial hit, do not enable config cache

    - Measure whether a configuration cache hit happened

- Add the config cache HTML report as a CI artifact so you can debug issues.
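The checklist above can be sketched as a single CI step. All names in this sketch are hypothetical:

- `store` is an in-memory stand-in for S3 or your CI cache.
- `run(mode)` stands in for invoking Gradle and returns whether Gradle reported Configuration Cache reuse.
- `archive()` stands in for tarring the cache directories.

```python
import hashlib
import json
from typing import Callable


def invocation_hash(files_hash: str, jdk: str, jvm_args: list[str], env: dict[str, str], tasks: list[str]) -> str:
    """Fold every input of the Gradle invocation into one stable digest.

    `env` should hold only the environment variables the build actually reads.
    """
    payload = json.dumps(
        {"files": files_hash, "jdk": jdk, "jvm_args": jvm_args, "env": env, "tasks": tasks},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode()).hexdigest()


def ci_step(
    key: str,
    prefix: str,
    store: dict[str, bytes],
    run: Callable[[str], bool],
    archive: Callable[[], bytes],
) -> list[str]:
    """Follow the checklist and return the steps taken, for logging or assertions."""
    steps = []
    if key in store:
        steps.append("restore-exact")
        steps.append("cc-hit" if run("build") else "cc-miss")
    elif any(existing.startswith(prefix) for existing in store):
        # Partial hit: reuse dependencies and transforms, but leave config cache off.
        steps.append("restore-partial")
        run("build-without-cc")
        steps.append("build-without-cc")
    else:
        run("dry-run")
        steps.append("dry-run")
        blob = archive()  # archive right after the dry run, before the real build
        steps.append("archive")
        steps.append("cc-hit" if run("build") else "cc-miss")
        store[key] = blob
        steps.append("upload")
    steps.append("publish-cc-report")  # e.g. upload the HTML report as a CI artifact
    return steps
```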

Here are the directories that need to be cached today for CC reuse. Some, like your-convention-plugins, are extremely project-specific. Others, like groovy-dsl and kotlin-dsl, only matter depending on whether you use Groovy, Kotlin DSL, or a mixture of build script formats.

./.gradle/<gradle-version>

./.gradle/configuration-cache

./your-convention-plugins/**/build

~/.gradle/caches/<gradle-version>/dependencies-accessors

~/.gradle/caches/<gradle-version>/generated-gradle-jars

~/.gradle/caches/<gradle-version>/groovy-dsl

~/.gradle/caches/<gradle-version>/kotlin-dsl

~/.gradle/caches/<gradle-version>/transforms

~/.gradle/caches/jars-9

~/.gradle/caches/modules-2

Build System Recommendations

Gradle Configuration Cache reuse on CI can dramatically improve build times for small incremental changes, but today the investment required to see the win is pretty high. I'd really rather see Gradle Develocity just do this out of the box.

Because my method's input hashing closely mirrors how Configuration Cache decides whether to reuse an entry, I have been able to achieve a very high hit rate in practice. The best hit rate I achieved in 2023 was around 60%, including complete and partial cache hits. Today the builds I am responsible for have a hit rate that hovers around 95%, with 50% of that being complete cache hits. With a configuration phase that takes 3+ minutes, it's absolutely worth the investment.
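Tracking hit rates like these needs two signals per build: what the restore step found, and whether Gradle actually reused the entry. The sketch below makes two assumptions:

- The Gradle log contains "Configuration cache entry reused" on reuse. This is the wording recent Gradle versions print, so check yours.
- An exact restore that Gradle still rejected counts as a partial hit, because the dependency cache and transforms still help.

```python
from collections import Counter

REUSED_MARKER = "Configuration cache entry reused"  # assumption: check your Gradle version's output


def build_outcome(restore: str, gradle_log: str) -> str:
    """restore is "exact", "partial" or "miss", as reported by the cache-restore step."""
    if restore == "exact" and REUSED_MARKER in gradle_log:
        return "complete"
    if restore in ("exact", "partial"):
        return "partial"  # something was restored, but the CC entry itself was not reused
    return "miss"


def hit_rates(builds: list[tuple[str, str]]) -> dict[str, float]:
    """Fractions of all builds that were complete hits and that were any kind of hit."""
    counts = Counter(build_outcome(restore, log) for restore, log in builds)
    total = len(builds) or 1  # avoid dividing by zero on an empty window
    return {
        "complete": counts["complete"] / total,
        "any": (counts["complete"] + counts["partial"]) / total,
    }
```

Both fractions are over all builds; how you report the split between complete and partial hits is a separate choice.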

Gradle CC on CI has overhead. It is expensive to set up and maintain the infrastructure needed for the performance win, and it definitely does not make sense if you're a small shop with fewer modules. If you're working in a medium-to-large (200+ modules) Android repo, you should be able to achieve these performance improvements in your CI pipeline. But again, look at your Gradle build scans first to see what your configuration time is before making this investment.

This process also leads to a greater understanding of your project's build and sets up reusing the local build cache on CI - but that's a blog post for another time.

Acknowledgements

To all the folks who helped me along the way, both in getting this working and in pushing me to share my learnings: thanks y'all!

Nicklas Ansman, Inaki Villar, Zac Sweers, John Rodriguez, Nelson Osacky, Adam Ahmed, John Buhanan, John Nimis, Anthony Restaino, Nick Doglio, Andy Rifken